- Multiple Shapefile data sources access programmatically online and converted to points in Arcpy, then mapped with ArcGIS pro

What is Arcpy?:¶

Arcpy is a Python library for automating GIS tasks in Arc GIS Pro

- Collection of modules, functions, and classes from the ArcGIS toolbox

- It allows you to perform geoprocessing, analysis, data management and mapping automation using python

In [1]:

import arcpy # allows for access to ArcGIS pro geoprocessing tools and workflow automation

import os # enables interaction with local system resources (paths to folders and files)

import requests # access data from the web

import zipfile ## process and extract downloaded zipfiles

import pandas as pd ## use to check spreadsheet formatting

initial_dir= os.getcwd() # capture the initial directory before setting environment for arcpy

print("Initial working directory: ", initial_dir)

What we did last time¶

- Created a custom function to pull a countytownship shapefile and load dataset into our local geodatabase

- Perform several QC checks on attributes and geometry

- Created a unique key by joining attributes

- Performed Spatial Join within Arcpy

What we will do this time:¶

- Update Features using Arcpy

- Perform Spatial Analysis within Arcpy taking each water well's latest reading per polygon

Create and re-use Custom Functions to Save time¶

When you create a python script, the functions within those scripts can be loaded into other scripts

To import your script, do as you would any library in python

python import my_script as ms

Now the functions within this script are available to use

python ms.my_function()

- Not used here but when multiple script hold different tools you'd be better served using a package and

__init__.pysetup

In [2]:

## Let's load in our custom tool

import custom_arcpy_tools as cat

In [ ]:

# note: importing the custom tool sets a variable called initial_dir to the path of the script

# this is intentionally done to support its functionality i.e., creating folders to store data

In [3]:

cat.listFC_dataset_Attributes()

## We purposefully caused an error!

# Why? To demonstrate that the error is due to workspace not being set in this new notebook or script

In [ ]:

# See the ValueError?

### Don't remember the arguments for this function?

### Use this:

# help(function_name)

In [ ]:

help(cat.listFC_dataset_Attributes) ## use help(function_name) to get the documentation for the function

In [ ]:

# Let's change the directory to where we have a geodatabase with some feature classes to work with

# Note: % is a magic command to access local system file and folders

%cd ./02 Result

In [4]:

# list the files

%ls

In [ ]:

## should see a file ending with .gdb. Copy the folder path and add its name below

In [5]:

## Use the gdb name in the folder, and set the path to the gdb

###====================== Set up file paths

gdb_path = r"c:\Projects\my_git_pages_website\Py-and-Sky-Labs\content\ArcPY\02 Result\MN_WaterContamination.gdb"

##===================== Define the path to script and project folder

# set the folder path as the working environment, and store it for later use

try:

arcpy.env.workspace = gdb_path

wksp = arcpy.env.workspace

except Exception as e:

print(f"Error setting up the path, {e}, Exception type: {type(e).__name__}") # python's built-in error messaging, {e} prints description and type(e).__name__ category

print("Working environment is here",wksp)

## Modify the env attribute to allow overwriting output files

arcpy.env.overwriteOutput= True

In [6]:

arcpy.Exists(wksp) ## similar to using print statement following if not os.path.exists(gdb_path)

Out[6]:

In [7]:

# Now. Retry the function that failed earlier

cat.listFC_dataset_Attributes()

Data Sources:¶

- Geography: MN

| Input Name | file types | Coordinate System | Source |

|---|---|---|---|

| MN PFAS levels | 1 excel file | GCS: NAD 83 | Report |

| MN Groundwater contamination atlas | 6 shape files | PCS: NAD 83 UTM Zone 15N | MetaData |

| MN Cities and townships | 1 shapefile | PCS: NAD 83 UTM Zone 15N | MetaData |

- We have already pulled and done some initial processing of all these datasets in the previous walkthroughs

- CTU poygon dataset was spatially joined to the MN wells dataset, bucketing wells by CTU

- Now will go into analysis of joined data

In [8]:

# quick reminder of where our current workspace is set

print("Data Folder is here" , wksp)

# let's call it gdb for clarity

gdb=wksp

In [9]:

## Count initial records

# first check the join fc: County/Township/Unorganized territory (CTU)

fc= "city_township_unorg"

print(f"Total records in {fc} feature class is: {int(arcpy.management.GetCount(fc)[0])}")

In [10]:

## check the target input fc

for fc in arcpy.ListFeatureClasses("*wells_result_sum*"):

print(f"Feature class: {fc}\n Total Record Count: {int(arcpy.management.GetCount(fc)[0])}")

In [11]:

fc ="city_township_unorg"

cat.showFieldinfo(fc)

In [ ]:

# Number of unique geometries or shapes in the layer (polygons in this case) matches total record count

# Each row represents a unique CTU location, as evident by the number of unique geometries and lack of overlap between them

# Combined_GNIS_ID is the corresponding unique attribute field marking each of those unique polygons

In [12]:

cat.open_arcgis_documentation()

Out[12]:

Approach¶

- Add Field --> Summarize Data to get the Latest Sampling Date by well and polygon

In [ ]:

## We have already spatially joined a point layer of ground water well sites and a polygon layer of CTU locations

# Next identify unique well sites within each CTU

# Define a unique well:

# A spatially unique well is a unique combination of CTU Feature ID (GNIS_FEATURE_ID) + County ID (COUNY_GNIS_ID) + Well ID (sys_loc_co)

## We need to

# 1) Create a new field that will mark each each spatially unique well within each polygon of the CTU layer

# 2) Join modified CTU layer with the Wells Data

# 3) Create a table or view of the joined dataset that selects only the most recent sampling date for each unique well

In [15]:

import custom_arcpy_tools as cat

cat.listFC_dataset_Attributes(wksp)

Add a Join Key Field¶

In [13]:

fc = "MN_WaterContamination_SpatialJoin"

for field in arcpy.ListFields(fc):

print(field.name)

In [14]:

fc = "MN_WaterContamination_SpatialJoin"

newfield= "JoinKey"

try:

if newfield not in [field.name for field in arcpy.ListFields(fc)]:

## a new field

arcpy.management.AddField(fc,newfield,"TEXT",field_length=50)

print(f"New {newfield} field added")

with arcpy.da.UpdateCursor(fc,["sys_loc_co","Combined_GNIS_IDs", newfield]) as cursor:

for row in cursor:

well_id= str(row[0])

combined_id= str(row[1])

row[2] = f"{well_id}_{combined_id}"

cursor.updateRow(row)

print("Successfully updated the table for the new field")

else:

print(f"{newfield} aleady exists in the target dataset {fc}")

except Exception as e:

print("Error occured", e , type(e).__name__)

except arcpy.ExecuteError:

print("Arcpy Error", arcpy.GetMessages(2))

Summary Statistics¶

arcpy.analysis.Statistics()¶

- Calculate summary statistics for fields in a table

- arcpy.analysis.Statistics(in_table, out_table, statistics_fields, {case_field}, {concatenation_separator})

- Key Arguments:

- in_table: input table with fields to be used to compute statistics

- out_table: output table to store the calculated results

- statistics_field: Field or fields containing attribute values to be used and statistic to be used

- Null values are excluded

- Can do multiple combinations of statistics and fields

- Format: [[field, {statistic_type}],...]

- case_field: Field or combination of fields that will be used to group the data when calculating statistics

- if not specified the statistics are calculated for all records

- the output will be a single record

- Key Arguments:

In [ ]:

## Calculate summary stats

fc = "MN_WaterContamination_SpatialJoin"

newfield= "JoinKey" # Field to be used to summarize or group the data

statisticfield= "latest_sam" # date field

try:

### TOOL: arcpy.analysis.Statistics(in_table, out_table, statistics_fields, {case_field}, {concatenation_separator})

arcpy.analysis.Statistics(fc, "MN_WaterContamination_Stats",[[statisticfield,"MAX"]], case_field= newfield)

print("Statistics Successfully calculated for", fc)

except Exception as e:

print("Error occured", e, type(e).__name__)

except arcpy.ExecuteError:

print("Arcy Error", arcpy.GetMessages(2))

In [17]:

arcpy.Exists("MN_WaterContamination_Stats")

Out[17]:

In [18]:

fc = "MN_WaterContamination_Stats"

record_count = arcpy.management.GetCount(fc)[0]

print("Record Count", record_count)

In [ ]:

### 11275 is the count of records for unique wells within each CTU by their most recent sampling date

# This is the level or grain our datasets needs to be in to map well water site contamination by CTU

# one row = a unique well within a unique CTU for the most recent sampling date

Join Statistics Table to Spatial Data Layer¶

arcpy.management.AddJoin()¶

- Join a layer to another layer or to a table based on a common field

- supports Feature layers, table views, and raster layers with attribute tables

- Result is temporary and must be saved (e.g., CopyFeatures, ExportFeatures, ExportTable)

- arcpy.management.AddJoin(in_layer_or_view, in_field, join_table, join_field, {join_type}, {index_join_fields}, {rebuild_index}, {join_operation})

In [ ]:

## We have already spatially joined a point layer of ground water well sites and a polygon layer of CTU locations

# Next identify unique well sites within each CTU

# Define a unique well:

# A spatially unique well is a unique combination of CTU Feature ID (GNIS_FEATURE_ID) + County ID (COUNY_GNIS_ID) + Well ID (sys_loc_co)

## We need to

# 1) Create a new field that will mark each each spatially unique well within each polygon of the CTU layer

# 2) Join modified CTU layer with the Wells Data

# 3) Create a table or view of the joined dataset that selects only the most recent sampling date for each unique well

fc = "MN_WaterContamination_SpatialJoin"

newfield= "JoinKey"

try:

if newfield not in [field.name for field in arcpy.ListFields(fc)]:

## a new field

arcpy.management.AddField(fc,newfield,"TEXT",field_length=50)

print(f"New {newfield} field added")

with arcpy.da.UpdateCursor(fc,["sys_loc_co","Combined_GNIS_IDs", newfield]) as cursor:

for row in cursor:

well_id= str(row[0])

combined_id= str(row[1])

row[2] = f"{well_id}_{combined_id}"

cursor.updateRow(row)

print("Successfully updated the table for the new field")

else:

print(f"{newfield} aleady exists in the target dataset {fc}")

except Exception as e:

print("Error occured", e , type(e).__name__)

except arcpy.ExecuteError:

print("Arcpy Error", arcpy.GetMessages(2))

## Calculate summary stats

fc = "MN_WaterContamination_SpatialJoin"

newfield= "JoinKey" # Field to be used to summarize or group the data

statisticfield= "latest_sam" # date field

try:

### TOOL: arcpy.analysis.Statistics(in_table, out_table, statistics_fields, {case_field}, {concatenation_separator})

arcpy.analysis.Statistics(fc, "MN_WaterContamination_Stats",[[statisticfield,"MAX"]], case_field= newfield)

print("Statistics Successfully calculated for", fc)

except Exception as e:

print("Error occured", e, type(e).__name__)

except arcpy.ExecuteError:

print("Arcy Error", arcpy.GetMessages(2))

### 11275 is the count of records for unique wells within each CTU by their most recent sampling date

# This is the level or grain our datasets needs to be in to map well water site contamination by CTU

# one row = a unique well within a unique CTU for the most recent sampling date

### Join two tables based on a common field and its values

in_fc= "MN_WaterContamination_SpatialJoin" # input table

in_join_key= "JoinKey"

join_table = "MN_WaterContamination_Stats" # Join table

join_table_key= "JoinKey"

try:

### TOOL: arcpy.management.AddJoin(in_layer_or_view, in_field, join_table, join_field, {join_type}, {index_join_fields}, {rebuild_index}, {join_operation})

arcpy.management.AddJoin(in_fc,in_join_key, join_table, join_table_key)

print(f"Successfully Joined {in_fc} and {join_table}")

print(arcpy.GetMessages(0))

except arcpy.ExecuteError:

print("Arcy Error: ", arcpy.GetMessages(2))

except Exception as e:

print("Error occured", e, type(e).__name__)

### We added the Max Date from the stats table to our joined data, now we will use this to refine our joined dataset

# if the Joined Layer has different dates for each well, then we should be able to filter it using this max date field

# would give us the grain of data we need: one row is one unique well within one unique CTU for the latest sampling date

In [ ]:

### We added the Max Date from the stats table to our joined data, now we will use this to refine our joined dataset

# if the Joined Layer has different dates for each well, then we should be able to filter it using this max date field

# would give us the grain of data we need: one row is one unique well within one unique CTU for the latest sampling date

Select the latest water sampling dates using Select By Attributes¶

arcpy.management.SelectLayerByAttribute()¶

- Adds updates, or removes a selection based on an attribute query

- Only works on feature layers or table views!

- If the query is present only those features will be used in downstream processes

- arcpy.management.SelectLayerByAttribute(in_layer_or_view, {selection_type}, {where_clause}, {invert_where_clause})

In [58]:

in_fc= "MN_WaterContamination_SpatialJoin" # input table

out_lyr= "MN_WaterContamination_joined_layer"

#

### Join two tables based on a common field and its values

in_join_key= "JoinKey"

join_table = "MN_WaterContamination_Stats" # Join table

join_table_key= "JoinKey"

try:

### TOOL: arcpy.management.AddJoin(in_layer_or_view, in_field, join_table, join_field, {join_type}, {index_join_fields}, {rebuild_index}, {join_operation})

arcpy.management.MakeFeatureLayer(in_fc, out_lyr)

arcpy.management.AddJoin(out_lyr,in_join_key, join_table, join_table_key)

print(f"Successfully Joined {out_lyr} and {join_table}")

print(arcpy.GetMessages(0))

where_c = "latest_sam = MAX_latest_sam"

arcpy.management.SelectLayerByAttribute(out_lyr, "NEW_SELECTION", where_c)

print(f"Successfully selected features in {out_lyr}")

print(arcpy.GetMessages(0))

arcpy.management.CopyFeatures(out_lyr, "MN_latest_WaterSamples_Joined_lyr")

print(f"Successfully copied features in {out_lyr}")

print(arcpy.GetMessages(0))

except arcpy.ExecuteError:

print("Arcy Error: ", arcpy.GetMessages(2))

except Exception as e:

print("Error occured", e, type(e).__name__)

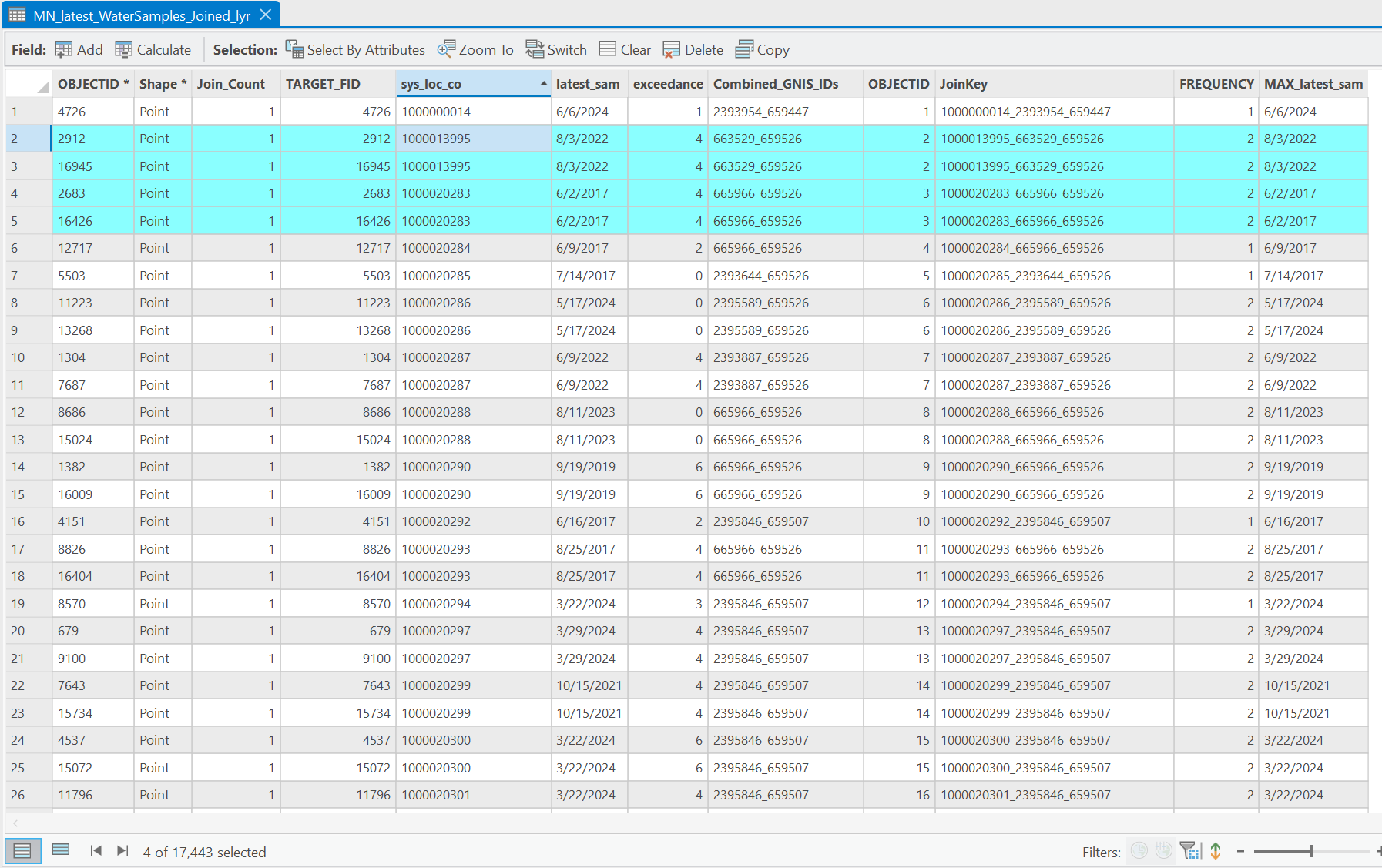

Check Results¶

- The attribute table of a joined dataset comprised of water well points enriched with latest Date for each well ID

- Our goal is to have one row for each unique well site with its latest sampling date

In [ ]:

### When we added the Max Sampling date, did we change the grain of the well water point layer from unique well IDs to unique wells IDs within a CTU polygon?

## No.

# What about those well IDs with multiple records of the same dates for the same CTU?

In [ ]:

## We could have gone a different way and deduplicated the dataset before adding the table, giving us the desired result of one row per unique well

# But for learning purposes our process is more informative

# Now let's understand a bit more about the data and clean up this result

In [ ]:

### check duplicate dates for each unique well id.

from collections import Counter

fc= "MN_WaterContamination_SpatialJoin" # we could have used the newly created layer

dup_date_counter = Counter() # Counter dictionary enables us to collect and count the number of times unique keys appear (key:key_counts)

field_name= "sys_loc_co"

with arcpy.da.SearchCursor(fc, [field_name,"JoinKey","latest_sam"]) as cursor:

for row in cursor:

key = (row[0],row[1],row[2])

dup_date_counter[key]+= 1 # count each time we see the same well id + ctu_id + date

count_printedrows = 0 # counter to limit printed duplicates

for id,count in dup_date_counter.items():

## print the well_id_ctu_id_latest_sample date combos that appear more than once

if count >1 and count_printedrows <20:

count_printedrows +=1

print(f"Unique well CTU date combo: {id}, appears {count} times")

In [ ]:

# check the number of records we expect in the new table

cat.showFieldinfo(fc)

In [ ]:

### What happened?

### 11246 unique records exist by well ID alone but we know there are more unique wells if we combine well ID + CTU location ID

# We expected 11275 records because their are 11275 unique joinkey values

# suggests duplicate well IDs

In [ ]:

from collections import defaultdict

well_ctu = defaultdict(set) # stores each well id with a unique CTU ID

fc= "MN_WaterContamination_SpatialJoin"

with arcpy.da.SearchCursor(fc, ["sys_loc_co", "Combined_GNIS_IDS"])as cursor:

for row in cursor:

well_id = row[0]

ctu_id = row[1]

# use the well id as key

well_ctu[well_id].add(ctu_id) # store the CTU ids , if well_id exist with different CTUs they will be added

print("Wells that appear in multiple CTUS\n")

well_ctu_counter = 0

for well_id, ctu in well_ctu.items():

if len(ctu)>1:

print(f"{well_id} found in {len(ctu)} CTU list here\n:{sorted(ctu)}")

well_ctu_counter += 1

print(f"\n{well_ctu_counter} wells found associated with multiple CTUs")

In [45]:

# check why there are less unique sys loc codes then unique well id+ CTU id

fc= "MN_WaterContamination_SpatialJoin"

row_counter = 0

with arcpy.da.SearchCursor(fc, ["JoinKey", "latest_sam"], sql_clause=["DISTINCT" , "ORDER BY SYS_LOC_CO"]) as cursor:

for row in cursor:

row_counter +=1

print(f"{row_counter} total distinct rows")

In [ ]:

### We found a few things that indicate the need to deduplicate the data by combination of well_id + CTU_id

# 1. Same well ids are in different CTU polygons

# 2. Same well id has same date repeated multiple tmes

# 3. The Joinkey retains all the distinct well data by CTU including latest sampling date

Deduplicate Dataset and Create a new Feature Class¶

- Situation:

- Our goal here is to map the maximum reported contamintant exceedance level across all well for each CTU polygon

- Map the distribution of contaminant levels exceeding guidelines as a color gradient for ease of comparison across all CTUs

- The MN Ground Water Contamination Altas Summary dataset contains duplicate well sampling sites with the same values for: well ID, collection date, contaminants, etc

- Our goal here is to map the maximum reported contamintant exceedance level across all well for each CTU polygon

- Task:

- Reduce this dataset down to the only the critical data to display max contaminants for unique wells

- Create a new feature class where one row = a unique well within a unique CTU for the most recent sampling date

####

CreateFeatureClass()

Creates a new feature class (fc) in a geodatabase or shape file in a folder

arcpy.management.CreateFeatureclass(out_path, out_name, {geometry_type}, {template}, {has_m}, {has_z}, {spatial_reference}, {config_keyword}, {spatial_grid_1}, {spatial_grid_2}, {spatial_grid_3}, {out_alias}, {oid_type})

- Key fields

- out_path: the enterprise or file geodatabase or folder to store the output

- out_name: name of the fc to be created

- geometry_type: specify the geometry of the output fc (e.g., POINT, MULTIPOINT, POLYGON, POLYLINE, MULTIPATCH (use for 3D features))

- template: an existing feature class or list of fc to define the attribute fields of the new feature class (see example below)

- spatial_reference: can specify with .prj file, path to a feature class, or defining a spatial reference (optional but if no spatial ref provided will be undefined)

In [ ]:

# List the fields in the fc

fc= "MN_WaterContamination_SpatialJoin" # input layer

print([f.name for f in arcpy.ListFields(fc)]) # list comprehension extracting all field names

In [ ]:

## We can use this approach to acess fields, add fields and to update the order

fc= "MN_WaterContamination_SpatialJoin" # input layer

# extracrt all the fields in the fc into a list

orig_fields = [f.name for f in arcpy.ListFields(fc)]

# Move a field to the front of a new list

reorg_list = ["facility_c"]+ [f for f in orig_fields if f != 'facility_c']

print([reorg_list])

In [ ]:

### OPTIONAL STEP

# Create a layer where we keep rows where the joinkey is unique

# this is more for analytic and QC purposes that we didn't lose data

# one row = one unique well id within one unique CTU

fc= "MN_WaterContamination_SpatialJoin" # input layer

outfc= "wells_per_CTU_unique"

# select fields without having to hardcode eachone

fields = [f.name for f in arcpy.ListFields(fc) ]

fields = ["JoinKey"] + [f for f in fields if f != "JoinKey"] # place the joinkey upfront

# Order by joinkey and lates_sam date descending

sql_c= (None, "ORDER BY JoinKey, latest_sam DESC")

# will store unique records as a dictionary with joinkey (well_id, CTU_ID) as the key and all row data as the values

unique_records = {}

with arcpy.da.SearchCursor(fc,fields, sql_clause=sql_c) as cursor:

for row in cursor:

join_id = row[0]

# check unique records dictionary, keeps the first joinkey to avoid duplicates

if join_id not in unique_records:

# store the entire row

unique_records[join_id]= row # store corresponding row and join key

sr= arcpy.Describe(fc).spatialReference

### TOOL: arcpy.management.CreateFeatureclass(out_path, out_name, {geometry_type}, {template}, {has_m}, {has_z}, {spatial_reference}, {config_keyword}, {spatial_grid_1}, {spatial_grid_2}, {spatial_grid_3}, {out_alias}, {oid_type})

arcpy.management.CreateFeatureclass(wksp, outfc, "POINT", template=fc, spatial_reference= sr) # template copies the fc schema-field types, lengh, order

try:

with arcpy.da.InsertCursor(outfc, fields) as icursor:

for row in unique_records.values():

icursor.insertRow(row)

except Exception as e:

print("Error occured", e, type(e).__name__)

except arcpy.ExecuteError:

print("Arcpy Error", arcpy.GetMessages(2))

In [40]:

cat.showFieldinfo(outfc)

In [63]:

## Now we focus on getting the latest sample reading across all points in each CTU

# Input feature class

fc = "MN_WaterContamination_SpatialJoin"

outfc = "wells_per_CTU_latest"

# Get all field names (preserving full schema)

fields = [f.name for f in arcpy.ListFields(fc)]

fields = ["Combined_GNIS_IDs"] + [f for f in fields if f != "Combined_GNIS_IDs"] # Ensure CTU ID is first

# Order by CTU ID and latest sampling date descending

sql_c = (None, "ORDER BY Combined_GNIS_IDs, latest_sam DESC, exceedance DESC")

# Store the most recent record for each CTU

unique_records = {}

with arcpy.da.SearchCursor(fc, fields, sql_clause=sql_c) as cursor:

for row in cursor:

ctu_id = row[0] # Grouping by Combined_GNIS_IDs

if ctu_id not in unique_records:

unique_records[ctu_id] = row # Keep only the first/latest well sample record per CTU

# Use original spatial reference and schema

sr = arcpy.Describe(fc).spatialReference

# Create the output feature class using a schema template

arcpy.management.CreateFeatureclass(wksp,outfc,"POINT",template=fc,spatial_reference=sr)

# Insert the filtered rows into the output feature class

try:

with arcpy.da.InsertCursor(outfc, fields) as icursor:

for row in unique_records.values():

icursor.insertRow(row)

print("Successfully created a new Feature Class", outfc, arcpy.GetMessages(0))

except Exception as e:

print("Error occured", e, type(e).__name__)

except arcpy.ExecuteError:

print("Arcpy Error", arcpy.GetMessages(2))

Spatial Join Analysis¶

arcpy.analysis.SpatialJoin()¶

- arcpy.analysis.SpatialJoin(target_features, join_features, out_feature_class, {join_operation}, {join_type}, {field_mapping}, {match_option}, {search_radius}, {distance_field_name}, {match_fields})

- Target features : features to be enriched with attributes

- Join features: features contributing attribute data

- Key optional arguments:

- join operation: Type of join either JOIN_ONE_TO_ONE or JOIN_ONE_TO_MANY. default one-to-one

- join type : Number of target records to keep either 'KEEP_ALL'(default, keep all records) or 'KEEP_COMMON' (only matched records are kept). Default keeps all fields

- field mapping: Use this to customize output layer fields, add, delete, rename, re-order,change properties, and combine fields

- match option: Intersect, Within, Contains, Closest, Closest_Geodesic,Are_identical_to, Largest_overlap...and more!

- search_radius: distance to search for matches

- match fields: pairs of fields to restrict the join to only those fields with matching values

In [64]:

### Goal: Create the final layer with the latest well data for the max exceedance value

# Will show the max contaminanat levels for each CTU based on the latest well data

# Spatial join to enrich the CTU layer with the latest max contaminant data

ctu_polygons = "city_township_unorg" # target features

latest_points = "wells_per_CTU_latest"# join features

out_fc = "CTU_latest_sample_summary"

try:

## target # join features

arcpy.analysis.SpatialJoin(ctu_polygons,latest_points,out_fc,join_operation="JOIN_ONE_TO_ONE",join_type="KEEP_ALL",match_option="INTERSECT")

print("Successfully joined the features", arcpy.GetMessages(0))

except Exception as e:

print("Error occured", e, type(e).__name__)

except arcpy.ExecuteError:

print("Arcpy Error", arcpy.GetMessages(2))